One-shot image generation from text prompts used to be the stuff of science fiction, but as OpenAI continues to make huge strides with DALL-E, the goal seems closer than ever.

Text alone can sometimes suffice to describe images, such as cartoons and photographs. However, creating them may require particular talents or hours of effort. As a result, a tool that can generate realistic pictures from natural language may allow humans to create rich and varied visual content with ease.

The capacity to modify photos using natural language also allows for iterative improvement and fine-grained control, both of which are critical for real-world applications of image generation AI.

In a recently released publication, OpenAI summarizes the advancements made to DALL-E since its release in 2021. In just over a year, OpenAI has shown dramatic improvements in text prompt comprehension and image generation quality. The point at which AI-generated content is indistinguishable from human creations may only be a few steps away.

The new research shows the power of AI-generated image content and is one more step towards the OpenAI team's goal of artificial general intelligence.

In this article we will take a look at what DALL-E is, its limitations and the concerns around its public use.

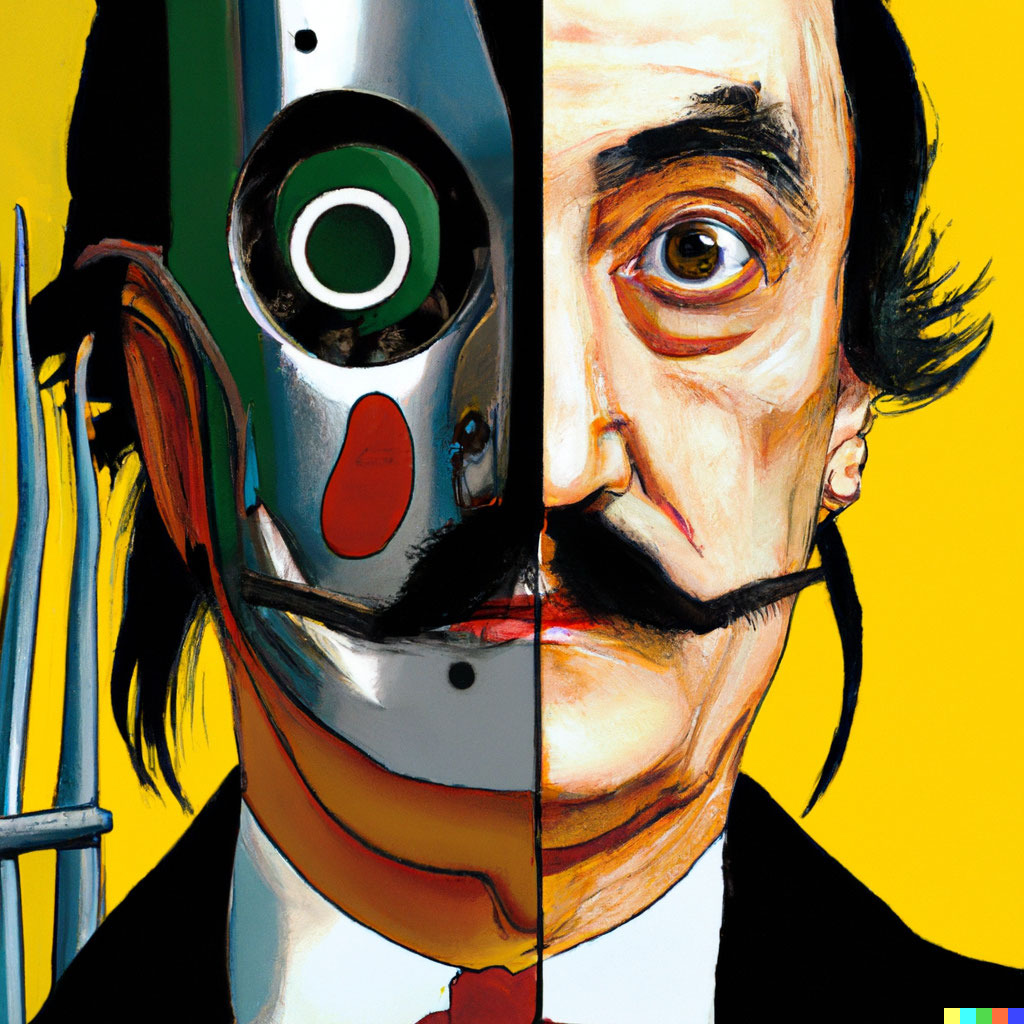

Above: Selected high-resolution images generated by the production model of DALL-E 2

Who is OpenAI?

OpenAI was founded in late 2015 by Elon Musk, Sam Altman and others. Its mission is to ensure that artificial general intelligence (AGI), by which they mean highly autonomous systems that outperform humans at most economically valuable work, benefits all of humanity.

The company began as a non-profit research venture aimed at democratizing AI and the understanding of it throughout the world. Some founding members have since stepped away, and large institutional investors such as Microsoft now fuel the company's research and drive large leaps in AI.

What is Text to Image Generation?

"Image generation" sounds bland, but what we are really talking about is taking abstract text descriptions and producing relevant images that humans can understand.

We can equate this process to an artist, particularly a commercial artist, who takes in a project description and then produces a variety of compositions from which the "best" is chosen.

Even the most talented designers and artists need to conceptualize the composition before starting to create a final product. The DALL-E research gives us a peek inside the "brain" of the AI to see how this is accomplished using only machines. It's exciting progress that will undoubtedly have a huge impact on our culture and civilization.

What is DALL-E?

First off, the name DALL-E comes from a combination of Dalí and WALL-E, the former being the famous artist Salvador Dalí and the latter the cute robot of the 2008 film of the same name.

While the name implies a connection between artist and machine, in reality this is software designed to mimic human behavior and not some sort of cyborg!

DALL-E was first released in 2021 and illustrated the potential AI-generated artwork has for creating content similar to that made by human beings. The first release came alongside the CLIP model, and the GLIDE model followed later that year; both showed immense potential in understanding text and generating images.

The release of DALL-E 2 builds on the CLIP model, particularly through an approach called unCLIP, which inverts the CLIP image encoder.

DALL-E 2 is a powerful combination of CLIP and diffusion models that creates photorealistic images based on text input alone or on text plus a reference image.

What are CLIP and GLIDE?

CLIP (Contrastive Language–Image Pre-training) was released with DALL-E 1 in 2021 and is a neural network which efficiently learns visual concepts from natural language supervision. It can be applied to any visual classification benchmark by simply providing the names of the visual categories to be recognized, similar to the “zero-shot” capabilities of GPT-2 and GPT-3.
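To make the zero-shot idea concrete, here is a minimal sketch in plain Python. It assumes an image and a set of candidate captions have already been turned into embedding vectors; the three-dimensional vectors, the caption strings and the `temperature` value are all invented for illustration and do not come from the real CLIP model. Classification then amounts to picking the caption whose embedding has the highest cosine similarity to the image embedding.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def zero_shot_classify(image_embedding, label_embeddings, temperature=0.07):
    """Pick the label whose text embedding is closest to the image embedding."""
    labels = list(label_embeddings)
    sims = [cosine_similarity(image_embedding, label_embeddings[name])
            for name in labels]
    # Dividing by a small temperature sharpens the probability distribution.
    probs = softmax([s / temperature for s in sims])
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], dict(zip(labels, probs))

# Hypothetical embeddings, invented for illustration only.
label_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.3, 0.1]

best_label, probabilities = zero_shot_classify(image_embedding, label_embeddings)
print(best_label)
```

No classifier is trained for the three categories here: adding a new category only means adding one more caption and its embedding, which is what makes the approach "zero-shot".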

GLIDE (Guided Language to Image Diffusion for Generation and Editing) is a text-conditioned diffusion model that generates images from what is essentially noise, and it can use CLIP to guide that process.
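The "images from noise" idea can be sketched with the noising step commonly used in diffusion models. This is a toy example under stated assumptions: the linear noise schedule, its start and end values, and the four-value "image" are illustrative choices, not details taken from GLIDE or DALL-E. The forward step mixes a clean signal with Gaussian noise, and the same formula can be inverted if the added noise is known. A real model trains a neural network to predict that noise; the sketch cheats by reusing the true noise.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Forward process: blend the clean signal with Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, noise)]
    return xt, noise

def recover_clean(xt, predicted_noise, alpha_bar):
    """Invert the forward step, given a prediction of the added noise."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(xt, predicted_noise)]

rng = random.Random(0)
alpha_bars = make_alpha_bars(num_steps=1000)
pixels = [0.2, -0.5, 0.9, 0.0]  # a made-up four-"pixel" image

# After the final step almost none of the original signal remains.
noisy, true_noise = add_noise(pixels, alpha_bars[-1], rng)

# A trained network would estimate the noise; here we pass the true noise.
restored = recover_clean(noisy, true_noise, alpha_bars[-1])
print(alpha_bars[-1])
print(restored)
```

In practice the noise estimate is imperfect, so generation removes a little noise at a time over many steps rather than jumping straight to a clean image.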

While the GLIDE model produced an impressive set of images from text prompts, it generated images at 64x64px and upsampled them to 256x256px, which limited the final resolution. DALL-E 2 adds a further upsampling stage that allows effective upscaling to 1024x1024px.

The GLIDE model Colab notebook, which features a reduced set of hyperparameters and limited output resolution, produced some interesting results when I asked it to create impressionist-style landscapes on Mars.

Above: Variations of an input image by encoding with CLIP and then decoding with a diffusion model. The variations preserve both semantic information like presence of a clock in the painting and the overlapping strokes in the logo, as well as stylistic elements like the surrealism in the painting and the color gradients in the logo, while varying the non-essential details.

How Does DALL-E Work?

DALL-E 2 is a two-stage model: a prior that generates a CLIP image embedding given a text caption, and a decoder that generates an image conditioned on the image embedding. In other words, you can type what you want and DALL-E will give you some impressive options.

It does this by combining CLIP's text-to-image association with a diffusion decoder, which takes noisy images and gradually resolves them toward the target text. We can see this process illustrated below.
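In code terms, the data flow of the two stages can be sketched as follows. This is only a structural outline: `prior`, `decoder`, `generate`, `EMBED_DIM` and `IMAGE_SIZE` are hypothetical names, and the "networks" are replaced by a deterministic hash so the example runs without any trained model. It shows how one caption becomes one embedding, which is then decoded into several candidate images.

```python
import hashlib
from typing import List

EMBED_DIM = 8    # hypothetical; real CLIP embeddings are far larger
IMAGE_SIZE = 4   # hypothetical; stands in for a real image resolution

def _pseudo_vector(seed: str, length: int) -> List[float]:
    """Deterministic stand-in for a neural network: hash text into floats in [0, 1]."""
    values: List[float] = []
    counter = 0
    while len(values) < length:
        digest = hashlib.sha256(f"{seed}:{counter}".encode()).digest()
        values.extend(byte / 255.0 for byte in digest)
        counter += 1
    return values[:length]

def prior(caption: str) -> List[float]:
    """Stage 1 (stub): map a text caption to a CLIP-style image embedding."""
    return _pseudo_vector("prior:" + caption, EMBED_DIM)

def decoder(image_embedding: List[float], sample: int = 0) -> List[List[float]]:
    """Stage 2 (stub): produce an image conditioned on the image embedding."""
    seed = "decoder:" + ",".join(f"{v:.6f}" for v in image_embedding) + f":{sample}"
    flat = _pseudo_vector(seed, IMAGE_SIZE * IMAGE_SIZE)
    return [flat[r * IMAGE_SIZE:(r + 1) * IMAGE_SIZE] for r in range(IMAGE_SIZE)]

def generate(caption: str, num_options: int = 3) -> List[List[List[float]]]:
    """Run both stages: one embedding from the prior, several decoded samples."""
    embedding = prior(caption)
    return [decoder(embedding, sample=i) for i in range(num_options)]

options = generate("an impressionist landscape on Mars")
print(len(options), "images of size", len(options[0]), "x", len(options[0][0]))
```

Separating the two stages means the same embedding can be decoded many times, which is one way to think about how a single prompt yields multiple options.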

What Types of Content Can DALL-E Create?

The limits of the software have yet to be tested since the model is not widely distributed. However, from the preliminary research reports it is clear that DALL-E excels in areas such as:

- Novel Content - create novel, never-before-seen images

- Art - create art of various types, including realist and impressionist

- In-Painting Replacements - replace content within an image by painting the area and describing the replacement

- Concept Creation and Ideation - create concepts on which to base other art

- Abstractions and Variations - create variations of size, content, composition and style from an original image

Human Reviewed Comparison Results

When human reviewers compared images from the two versions of the model side by side, a majority favored the DALL-E 2 content over that of the original DALL-E.

In fact, according to OpenAI, nearly 90% of reviewers preferred the DALL-E 2 images for photorealism. This is an impressive result and significantly better than the original version released just one year earlier.

Potential Uses of DALL-E 2

OpenAI's hope is that DALL·E 2 will empower people to express themselves creatively. DALL·E 2 also helps us understand how advanced AI systems see and understand our world, which is critical to their mission of creating AI that benefits humanity.

I can easily imagine the type of NFT artwork that could be generated, and it's not difficult to see how traditional artists could use the system for inspiration. There appears to be a large set of applications that DALL-E could address; however, it will take years of further research to find all the potential uses.

Potential Abuses of Image Generation

It is also pretty easy to see how the system could be abused. OpenAI has been proactive about this issue by restricting access during development and also restricting the types and amount of content that the models are trained on.

The potential for abuse of such software is significant: you can imagine it being used to place celebrities in compromising situations or just to mislead people in general, whether intentionally or not.

While any new technology poses the possibility of abuse, the applications for the improvement of humankind are vast and worth the risk in this writer's opinion!

Summary

OpenAI continues to lead in the development of systems towards artificial general intelligence. The rapid progress on DALL-E, GPT-3 and other models suggests that the commercial applications of such software could be great enough to change the course of human history. I think that's pretty neat stuff and I hope you do too!